Kubernetes Management – 5 Things Developers Need to Know

Kubernetes management can be daunting for developers who don’t have a specialized understanding of orchestration technology. Learning Kubernetes takes practice and time, a precious commodity for devs who are under pressure to deliver new applications.

This post provides direction on what you need to know, and what you can skip, when taking advantage of Kubernetes. Let’s start with five things you need to know.

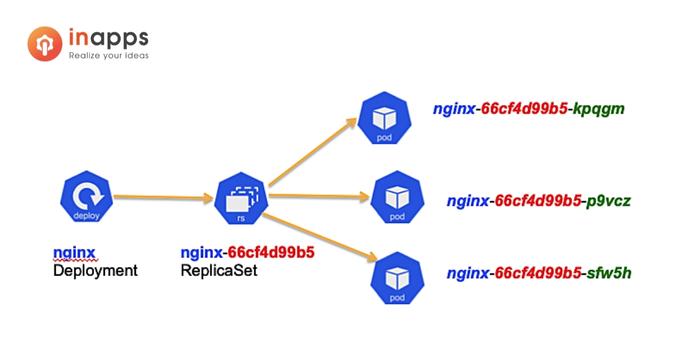

1. Kubernetes Naming Conventions

Each Kubernetes object has a name that’s unique for its type of resource and a unique identifier (UID) that’s unique across the entire cluster. Kubernetes.io does a great job of explaining this by saying, “You can only have one Pod named myapp-1234 within the same namespace, but you can have one Pod and one Deployment that are each named myapp-1234.” In addition, pods that share the same higher-level controller (e.g., a ReplicaSet or Deployment) inherit the same naming prefix.

For example, if your deployment name is ‘webapp,’ a corresponding ReplicaSet could be webapp-12345678, and the corresponding pod under that could be webapp-12345678-abcde. This way, every pod in this namespace has a unique name, but it is easy to tell which pods share the same controller and thus the same specification.
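The naming pattern above can be sketched in a few lines of Python. This is only an illustration of the convention, and `controller_names` is a hypothetical helper, not part of any Kubernetes tooling. It assumes the pod was created through a Deployment; the authoritative link between a pod and its controller is the pod's `metadata.ownerReferences` field, not its name.

```python
def controller_names(pod_name: str) -> dict:
    """Split a Deployment-style pod name into its conventional prefixes.

    Assumes the shape <deployment>-<replicaset-hash>-<pod-suffix>.
    """
    parts = pod_name.rsplit("-", 2)  # split off the last two dash-separated pieces
    if len(parts) != 3:
        raise ValueError(f"not a Deployment-style pod name: {pod_name!r}")
    deployment, rs_hash, _pod_suffix = parts
    return {
        "deployment": deployment,
        "replicaset": f"{deployment}-{rs_hash}",
        "pod": pod_name,
    }


print(controller_names("webapp-12345678-abcde"))
```

Because the split starts from the right, a deployment name that itself contains dashes (for example `my-web-app`) is still recovered intact.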

2. How to Get Your Application to Run

New Kubernetes users should have a basic understanding of services and deployments as they factor into running applications.

Services are a general way to route network traffic within the cluster, and sometimes from outside it too. They are fairly easy to define and give you a human-readable configuration for where traffic goes. An example would be a service named ‘webapp’ that routes traffic to pods with the label ‘app: web’. Any pod in the same namespace can then call the webapp pods by making a request to the URL ‘http://webapp’.
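As a rough sketch of the ‘webapp’ example, the Python below builds a Service manifest as a plain dictionary and mimics how its label selector picks pods. The port numbers and the `tier` label are assumptions made for illustration, and `service_manifest` and `selects` are hypothetical helpers; the real matching is done by Kubernetes itself.

```python
import json


def service_manifest(name, selector, port=80, target_port=8080):
    """Build a minimal Service manifest (port values are illustrative assumptions)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def selects(service, pod_labels):
    """Return True if every selector label is present on the pod with the same value."""
    selector = service["spec"]["selector"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


webapp = service_manifest("webapp", {"app": "web"})
print(json.dumps(webapp, indent=2))
print(selects(webapp, {"app": "web", "tier": "frontend"}))
print(selects(webapp, {"app": "db"}))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed manifest could be saved to a file and applied as-is, after adjusting the ports to match your application.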

Traditionally, in setting up networking, there are a number of steps that go into ensuring traffic routes to the right place, but using services in Kubernetes enables you to give directions at a higher level and the resulting action is automated.

Deployments are the simplest high-level controller. In stateless applications, it is enough for developers to understand that deployments will handle rolling updates with simple instructions, such as how many copies you want and what version you’re on.

Together, services and deployments ensure that your application connects to the correct endpoints; everything in between happens automatically. For more complex applications, it may be worth exploring some of the other high-level controllers, like StatefulSets and CronJobs.
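To make "how many copies you want and what version you're on" concrete, here is a minimal sketch that builds a Deployment manifest in Python. The image name `example.com/webapp:1.2.0` and the helper `deployment_manifest` are hypothetical; only the manifest field names come from the `apps/v1` Deployment API.

```python
import json


def deployment_manifest(name, image, replicas, labels):
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many copies you want
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                # the image tag is "what version you're on"
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest(
    name="webapp",
    image="example.com/webapp:1.2.0",  # hypothetical image
    replicas=3,
    labels={"app": "web"},
)
print(json.dumps(manifest, indent=2))
```

Changing the image tag and re-applying the manifest is what triggers a rolling update under the default deployment strategy; the labels here match the ‘app: web’ selector used by the service example earlier in this section.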

It is also helpful to understand the main principle of containers: How do I get to a consistent state? If an update or action causes an application to get into a bad state, just restarting the container resets it.

The container may not be on the same node, and you might not have the same set of interactions, but ultimately the most important things are the same as when you started.

3. How to Get Information About Your Application

Regardless of what infrastructure you are running on, this is what most developers care about. What went wrong, where did it go wrong, how critical is it and is it my problem? That last question may seem a bit cynical, but knowing which team and developer are best suited to solve a problem is crucial for operational efficiency.

The primary questions you need answered are: How do I tell if my application is running, and what does resource consumption look like?

A helpful point to note is that the command “kubectl get events” lists the events recorded in your current namespace, including those for your application.

Unfortunately, Kubernetes doesn’t return them in a useful order by default, but as your knowledge grows you can sort and filter them, for example with the --sort-by and --field-selector flags.
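If you prefer to post-process events yourself, the sketch below sorts the parsed output of `kubectl get events -o json` by time and keeps only warnings. The sample data is invented for illustration, and `warnings_in_order` is a hypothetical helper; it assumes each event carries the usual `type`, `reason`, `message`, and `involvedObject` fields.

```python
def event_time(event):
    """Use lastTimestamp when present, otherwise fall back to the creation time."""
    return event.get("lastTimestamp") or event["metadata"]["creationTimestamp"]


def warnings_in_order(event_list):
    """Return Warning events, oldest first, as one-line summaries."""
    # RFC 3339 UTC timestamps sort correctly as plain strings.
    events = sorted(event_list["items"], key=event_time)
    return [
        f'{event_time(e)} {e["involvedObject"]["name"]} {e["reason"]}: {e["message"]}'
        for e in events
        if e.get("type") == "Warning"
    ]


# Invented sample standing in for `kubectl get events -o json` output.
sample = {
    "items": [
        {
            "type": "Warning",
            "reason": "BackOff",
            "message": "Back-off restarting failed container",
            "lastTimestamp": "2024-01-01T10:05:00Z",
            "metadata": {"creationTimestamp": "2024-01-01T10:00:00Z"},
            "involvedObject": {"name": "webapp-12345678-abcde"},
        },
        {
            "type": "Normal",
            "reason": "Pulled",
            "message": "Container image already present",
            "lastTimestamp": "2024-01-01T10:01:00Z",
            "metadata": {"creationTimestamp": "2024-01-01T10:01:00Z"},
            "involvedObject": {"name": "webapp-12345678-abcde"},
        },
        {
            "type": "Warning",
            "reason": "Unhealthy",
            "message": "Liveness probe failed",
            "lastTimestamp": None,
            "metadata": {"creationTimestamp": "2024-01-01T10:02:00Z"},
            "involvedObject": {"name": "webapp-12345678-fghij"},
        },
    ]
}

for line in warnings_in_order(sample):
    print(line)
```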

4. How to Spot Problems Before They Affect Your Application

One of the benefits of Kubernetes is its ability to automatically return to a consistent state, but this can mask the effects of underlying problems for developers who do not know what to look for. For this reason, it is important that developers have access to Kubernetes logs and telemetry.

Metrics like CPU and memory usage become even more important for Kubernetes-based applications because they can reveal issues with applications that Kubernetes may have masked. Surfacing problems before they affect customers is crucial to maintaining positive application experiences.
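One simple way to read such metrics is to compare usage against the limit you configured. The sketch below converts a few common Kubernetes quantity strings (such as `450m` for CPU or `256Mi` for memory) and reports usage as a percentage. The helpers are hypothetical, the numbers are invented, and only the suffixes shown are handled.

```python
MEMORY_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_millicores(quantity: str) -> int:
    """Convert '450m' or '1.5' (cores) to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Convert '256Mi', '1Gi', or a plain byte count to bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def percent_of_limit(used: int, limit: int) -> float:
    """Usage as a percentage of the configured limit, to one decimal place."""
    return round(100 * used / limit, 1)


# Invented usage and limit values for illustration.
print(percent_of_limit(cpu_millicores("450m"), cpu_millicores("500m")))
print(percent_of_limit(memory_bytes("256Mi"), memory_bytes("1Gi")))
```

A container that sits close to its memory limit is a likely candidate for being killed and restarted, which is exactly the kind of issue a quiet restart can hide.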

5. When to Investigate Problems

At its core, Kubernetes is about keeping applications running for production, so there is no direct output that explains why an app crashed or compels developers to take an active role in remediation.

In most cases, Kubernetes just restarts the containers, and the problem is resolved. Thanks to the self-healing, resilient nature of Kubernetes and containers, a crash is rarely an immediate issue, but the same behavior can hide bad application behavior and let it go unnoticed for longer.
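A quick way to notice restarts that Kubernetes has quietly absorbed is to look at container restart counts. The sketch below scans the parsed output of `kubectl get pods -o json` for containers restarted at least a given number of times. The threshold of 3 and the sample data are arbitrary assumptions, and `restarting_containers` is a hypothetical helper.

```python
def restarting_containers(pod_list, threshold=3):
    """Return (pod, container, restarts) for containers at or above the threshold."""
    flagged = []
    for pod in pod_list["items"]:
        # containerStatuses can be absent, e.g. while a pod is still pending
        for status in pod.get("status", {}).get("containerStatuses", []):
            if status["restartCount"] >= threshold:
                flagged.append(
                    (pod["metadata"]["name"], status["name"], status["restartCount"])
                )
    return flagged


# Invented sample standing in for `kubectl get pods -o json` output.
sample_pods = {
    "items": [
        {
            "metadata": {"name": "webapp-12345678-abcde"},
            "status": {"containerStatuses": [{"name": "webapp", "restartCount": 7}]},
        },
        {
            "metadata": {"name": "webapp-12345678-fghij"},
            "status": {"containerStatuses": [{"name": "webapp", "restartCount": 0}]},
        },
        {"metadata": {"name": "webapp-12345678-klmno"}, "status": {}},
    ]
}

print(restarting_containers(sample_pods))
```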

There are times when an issue is worth a deeper investigation, such as when it affects customer experience or causes negative downstream effects for other teams. The type of service being provided and the impact that performance issues have on customers can also come into play.

For instance, requirements for applications supporting defense contractors will differ dramatically from those for a grocery app.

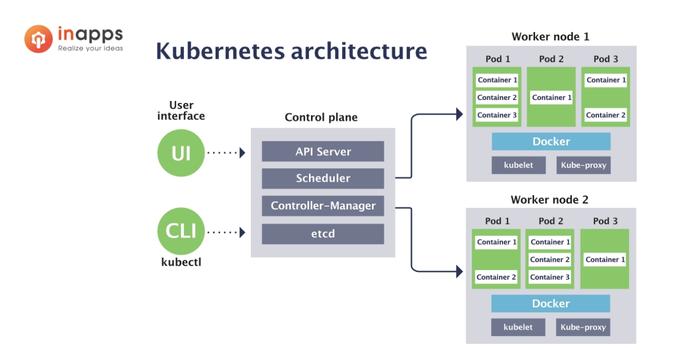

You Can Skip Deep Understanding of the Process

Although it’s essential for developers to understand how their applications run on Kubernetes, in most cases, they don’t need a deep understanding of how Kubernetes works under the hood. To a developer, Kubernetes may seem like chaos to some degree, but you can control the chaos by providing specific directions to follow.

For example, if you don’t specify where you want your pod to run, it will run anywhere that applications are allowed to run by default. Or, you can use taints/tolerations to tell Kubernetes not to run applications on specific nodes. However you configure (or don’t configure) this, you can use basic automated checks to show if the app is healthy.
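The taint and toleration idea can be illustrated with a deliberately simplified model in Python. This is a sketch of the matching rule only, not the scheduler's actual implementation: it treats `NoSchedule` and `NoExecute` taints as blocking, ignores the softer `PreferNoSchedule` effect, and uses an invented `dedicated=gpu` taint as sample data.

```python
def tolerates(toleration, taint):
    """Simplified check of whether one toleration matches one taint."""
    # A toleration with no effect matches taints of any effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # With Exists, an omitted key matches every taint key.
        return toleration.get("key") in (None, taint["key"])
    return (
        toleration.get("key") == taint["key"]
        and toleration.get("value") == taint.get("value")
    )


def can_schedule(pod_tolerations, node_taints):
    """A pod fits a node only if every blocking taint is tolerated."""
    blocking = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(
        any(tolerates(tol, taint) for tol in pod_tolerations) for taint in blocking
    )


# Invented example: a node reserved for GPU workloads.
gpu_node_taints = [{"key": "dedicated", "value": "gpu", "effect": "NoSchedule"}]
gpu_toleration = [
    {"key": "dedicated", "operator": "Equal", "value": "gpu", "effect": "NoSchedule"}
]

print(can_schedule([], gpu_node_taints))              # ordinary pod on tainted node
print(can_schedule(gpu_toleration, gpu_node_taints))  # pod that tolerates the taint
print(can_schedule([], []))                           # ordinary pod on untainted node
```

The takeaway matches the text above: a pod with no special instructions lands on any untainted node, while a tainted node stays off-limits unless the pod explicitly tolerates it.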

When getting started with Kubernetes, don’t overcomplicate it. Look at it as a way of managing your applications with a system that automatically takes action to repair itself and return to a healthy state with little intervention.

The basics outlined in this post will provide a great starting point for developers to build a foundation and focus their education. As their knowledge and experience grow, they can expand their implementation of Kubernetes to be as specific and robust as needed.
