Wasm Modules and Envoy Extensibility Explained, Part 1


Peter Jausovec

Peter is a software engineer and content creator at Tetrate, with expertise in distributed systems and cloud native solutions. He is the author of books and blogs on cloud native, Kubernetes and Istio, and is the creator of Istio Fundamentals, a free introductory course on Istio from Tetrate Academy.

If you’ve ever wondered what WebAssembly (Wasm) is and how it fits into the service mesh ecosystem, this is the article you want to read.

We’ll explain what Wasm can do and set the stage by talking a bit about extensibility in Envoy Proxy. Envoy is a service proxy (sidecar) and edge proxy (gateway) that can be configured through APIs. Next, we’ll talk about how Wasm fits into Envoy, covering WasmService and Proxy-Wasm.

This article is written for Wasm beginners, and we assume you don’t have any in-depth Wasm knowledge. In a companion article, we’ll explore Wasm using Kubernetes and Istio so you can be comfortable using both. You can check out the Istio Fundamentals course to learn how to get started with Istio.

What Is WebAssembly (Wasm)?

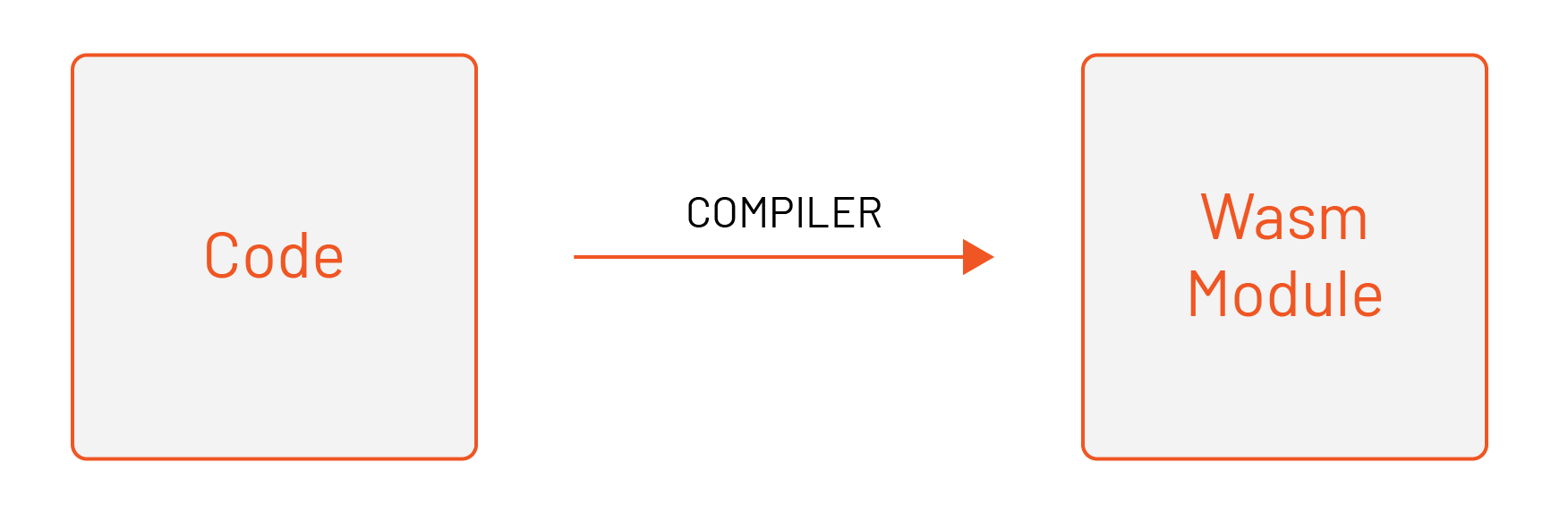

WebAssembly, more commonly known as Wasm, is a portable binary format for executable code that relies on an open standard. Developers code in their preferred language and compile that into a Wasm module. While there are implementation details to writing for Wasm, using a familiar source language helps developers get started quickly.

The Wasm module is isolated from the host environment and executed in a memory-safe sandbox called a virtual machine (VM). The modules can communicate with the host environment through an API.

The main goal of Wasm is to enable high-performance applications on web pages. For example, let’s say you’re building a web application with JavaScript. You could write some code in Go, or potentially any other language, and compile it into a binary file (Wasm module). You could then run the compiled Wasm module in the same sandbox as your JavaScript web application.

Initially, Wasm was designed to run in the web browser. However, you can also embed Wasm virtual machines into other host applications and execute Wasm modules there. This is exactly what Envoy does.

Envoy and Extensibility

Before we talk about Wasm in the context of Envoy, let’s mention a couple of ways we can extend Envoy. At the heart of the Envoy proxy lies a variety of filters that provide features such as network routing, observability and security.

With a combination of different filters (network, HTTP filters), you can augment the incoming requests. You can translate protocols, collect statistics, add/remove/update headers, perform authentication and much more.

There are various pre-built filters already available for you to use, such as the envoy.filters.http.ratelimit filter, which allows you to configure rate limiting for your services, the CSRF filter, the CORS filter and more. Before considering extending Envoy, you should always check what the latest version already supports. However, you can also write your own filters and extend Envoy functionality.

Let’s take the example of adding an additional header to the request to introduce the options Envoy has for extensibility.

Native C++ Filters

One approach is to write your filter in Envoy’s native language, C++, and package it together with Envoy. To add a header, you’d have to write a new filter in C++ and then link it with the Envoy binary, which means recompiling Envoy and maintaining your own version of it. Here’s an example that demonstrates how to create a new filter that adds an HTTP header. Taking this route isn’t very practical: you shouldn’t be maintaining your own version of Envoy just because of the filters you use.

Lua-Based Filters

A second approach is to write filters using Lua scripts. An existing filter called the HTTP Lua filter allows you to include a Lua script inline with the configuration. A configured Lua HTTP filter that adds a new header to the response defines an envoy_on_response function in its inline script and calls headers():add() on the response handle it receives.

You could use this approach if the filter you’re creating is not too complex. You can write the Lua script directly in the YAML configuration or point to a script file. One downside of this approach is that either your script is part of the configuration or you have to figure out a way to copy the script file and make it accessible to the Envoy proxy. Both of these choices make it hard to develop and test. If your filter is more complex, you’re better off picking one of the other options.

Wasm-Based Filters

Another approach is to write an Envoy filter as a separate Wasm module and have Envoy dynamically load it during runtime.

At the moment, you can configure the EnvoyFilter resource to load a Wasm module by pointing to a local .wasm file that’s accessible by the Envoy proxy. The second option is to use remote fetching and provide a URI; in this case, Envoy downloads the .wasm file for you. Those using Istio can look forward to native Wasm support in version 1.11. Once available, sidecars can use Wasm modules published to an OCI-compliant registry, for example with “getenvoy extension push.”

To recap, using Wasm allows you to use a different programming language to write your filter. (No need to write a native C++ filter!) And it doesn’t require you to recompile and maintain your own special version of Envoy. If we continue with the header example, we could write the extension in Go and add an additional header to the response in that code.
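As a rough illustration of the header example, here is a minimal sketch in plain Go. It does not use a real Proxy-Wasm SDK: the `Host` interface, `fakeHost`, `addHeaderFilter` and the header name `x-wasm-example` are all hypothetical names chosen for this sketch. It only shows the shape of the interaction, where the proxy invokes a callback and the extension calls back into an API the proxy exposes.

```go
package main

import "fmt"

// Host is a hypothetical stand-in for the API a proxy exposes to an extension.
type Host interface {
	AddResponseHeader(key, value string)
}

// fakeHost records headers in memory so the sketch runs without a proxy.
type fakeHost struct {
	headers map[string][]string
}

func newFakeHost() *fakeHost {
	return &fakeHost{headers: map[string][]string{}}
}

func (h *fakeHost) AddResponseHeader(key, value string) {
	h.headers[key] = append(h.headers[key], value)
}

// addHeaderFilter is the extension. It reaches the proxy only through Host.
type addHeaderFilter struct {
	host Host
}

// OnResponseHeaders is the callback the host would invoke once the
// response headers are available.
func (f *addHeaderFilter) OnResponseHeaders() {
	f.host.AddResponseHeader("x-wasm-example", "hello")
}

func main() {
	host := newFakeHost()
	filter := &addHeaderFilter{host: host}
	filter.OnResponseHeaders()
	fmt.Println(host.headers["x-wasm-example"])
}
```

In a real extension, the code would be compiled to a .wasm file and the host side would be provided by Envoy rather than by an in-memory fake.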

Wasm in Envoy

Envoy embeds a subset of a V8 virtual machine (VM). V8 is a high-performance JavaScript and WebAssembly engine that’s written in C++ and is used in Chrome and Node.js, along with other applications and platforms.

Envoy operates using a multithreaded model. That means there’s one main thread that is responsible for handling configuration updates and executing global tasks.

In addition to the main thread, there are also worker threads responsible for proxying individual HTTP requests and TCP connections. The worker threads are designed to be independent from each other. For example, a worker thread processing one HTTP request will not affect, and is not affected by, other worker threads processing other requests.

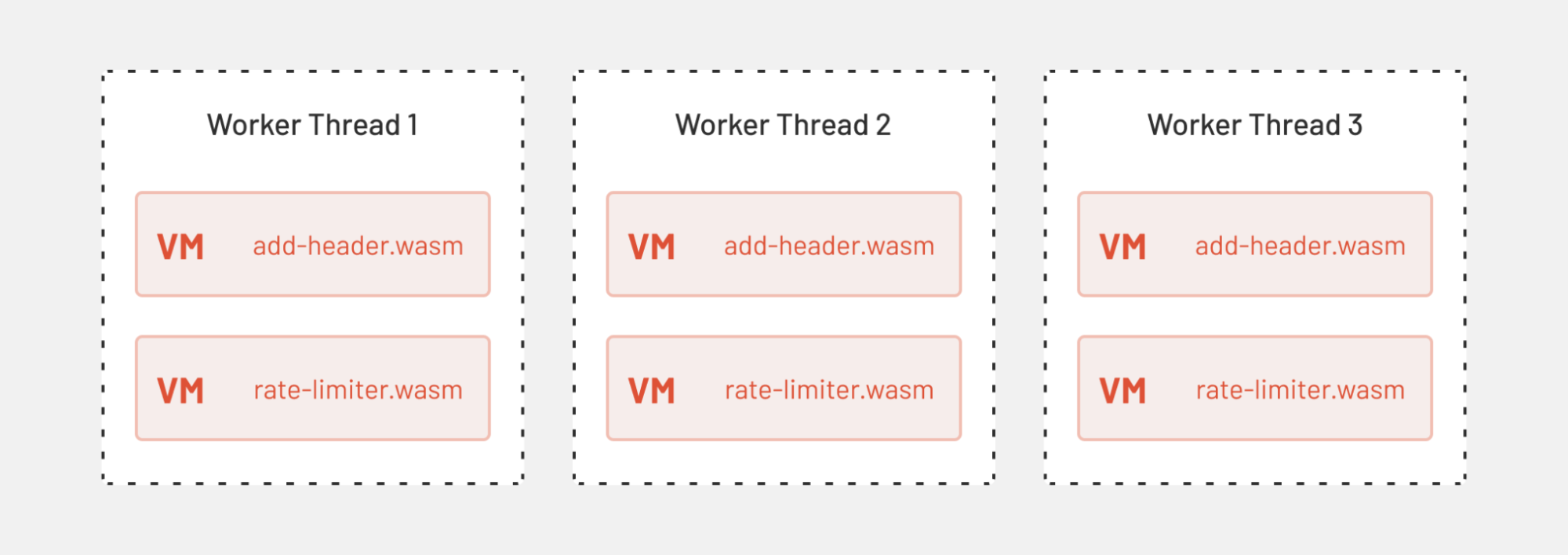

To avoid expensive cross-thread synchronization, at the cost of higher memory usage, each thread owns its own replica of resources, including the Wasm VMs.

A Proxy-Wasm extension gets distributed as a Wasm module, that is, a file with the .wasm extension. At runtime, Envoy loads every unique Wasm module (all *.wasm files) into a unique Wasm VM. Since a Wasm VM is not thread-safe (i.e., multiple threads would have to synchronize access to a single Wasm VM), Envoy creates a separate replica of the Wasm VM for every thread on which the extension will be executed. Consequently, every thread might have multiple Wasm VMs in use at the same time.

Let’s look at an example of how two extensions get loaded in worker threads. One sample extension is the add-header.wasm that adds a custom header, and the second one is a custom rate limiter we created called rate-limiter.wasm.
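The replication described above can be modeled with a small Go sketch. This is not Envoy code: the `vm` type and `replicate` function are hypothetical, and the choice of three worker threads is arbitrary. The point is only that each thread ends up with its own VM for each module, so nothing is shared across threads.

```go
package main

import "fmt"

// vm is a hypothetical stand-in for one Wasm VM instance holding one module.
type vm struct {
	module string
	thread int
}

// replicate creates one VM per (thread, module) pair, so that no VM
// is ever shared between two threads.
func replicate(modules []string, threads int) map[int][]*vm {
	perThread := make(map[int][]*vm, threads)
	for t := 0; t < threads; t++ {
		for _, m := range modules {
			perThread[t] = append(perThread[t], &vm{module: m, thread: t})
		}
	}
	return perThread
}

func main() {
	modules := []string{"add-header.wasm", "rate-limiter.wasm"}
	const workers = 3 // arbitrary number of worker threads for the sketch
	vms := replicate(modules, workers)
	for t := 0; t < workers; t++ {
		for _, v := range vms[t] {
			fmt.Printf("thread %d -> VM for %s\n", v.thread, v.module)
		}
	}
}
```

With two modules and three threads, the sketch creates six VM instances in total, which is the memory cost traded for avoiding synchronization.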

Wasm Service

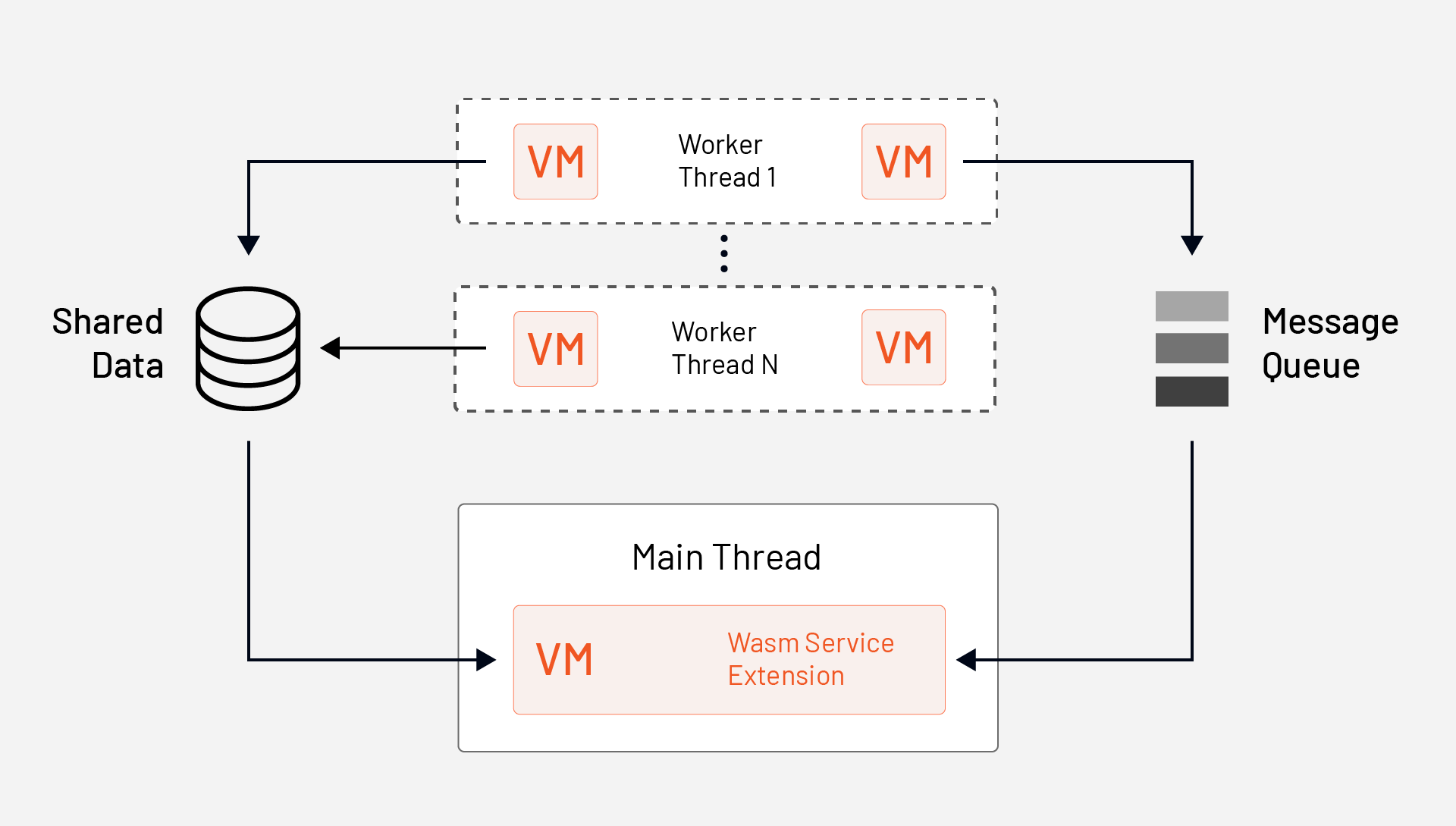

Wasm extensions can be HTTP filters, network filters, access loggers or a dedicated extension type called WasmService. HTTP filters, network filters and access loggers get executed inside a Wasm VM on a worker thread. As we mentioned, the threads are independent, and they are inherently unaware of request processing that’s happening on other threads. These extensions are also stateless.

However, Envoy also supports stateful scenarios. For example, you could write an extension that aggregates stats such as request data, logs or metrics across multiple requests, which essentially means across multiple worker threads. For this scenario you’d use the WasmService extension and an API for cross-thread communication (e.g., Message Queue and shared data).

Unlike HTTP filters, network filters or access loggers, WasmService extensions are not called as part of the request processing flow. They get executed on the main thread rather than on worker threads, so these extensions don’t affect request latency.

An example scenario for a WasmService extension would be to use the Message Queue API to subscribe to a queue and receive messages sent by the HTTP filter, network filter or access loggers from the worker threads. The WasmService extension can then aggregate data that are received from the worker threads.
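The aggregation scenario can be approximated in plain Go, with goroutines standing in for worker threads and a channel standing in for the message queue. This is only an analogy under those assumptions, not the Proxy-Wasm Message Queue API; `message`, `aggregate` and `runWorkers` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// message is a hypothetical payload a filter might enqueue per request.
type message struct {
	worker int
	bytes  int
}

// aggregate drains the queue and sums bytes per worker. It stands in for
// the single service that runs outside the request path.
func aggregate(queue <-chan message) map[int]int {
	totals := map[int]int{}
	for m := range queue {
		totals[m.worker] += m.bytes
	}
	return totals
}

// runWorkers simulates worker threads that each handle some requests and
// enqueue one message per request, then aggregates everything in one place.
func runWorkers(workers, requestsPerWorker, bytesPerRequest int) map[int]int {
	// The buffer is large enough that no sender ever blocks.
	queue := make(chan message, workers*requestsPerWorker)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			for r := 0; r < requestsPerWorker; r++ {
				queue <- message{worker: id, bytes: bytesPerRequest}
			}
		}(w)
	}
	wg.Wait()
	close(queue)
	return aggregate(queue)
}

func main() {
	totals := runWorkers(3, 4, 100)
	for id := 0; id < 3; id++ {
		fmt.Printf("worker %d sent %d bytes\n", id, totals[id])
	}
}
```

The workers never read each other’s state. All cross-worker knowledge lives in the aggregator, which mirrors the split between stateless filters and a stateful WasmService.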

WasmService extensions aren’t the only way to persist data. You can also call out to HTTP or gRPC APIs. Moreover, you can perform actions outside requests using the timer API.

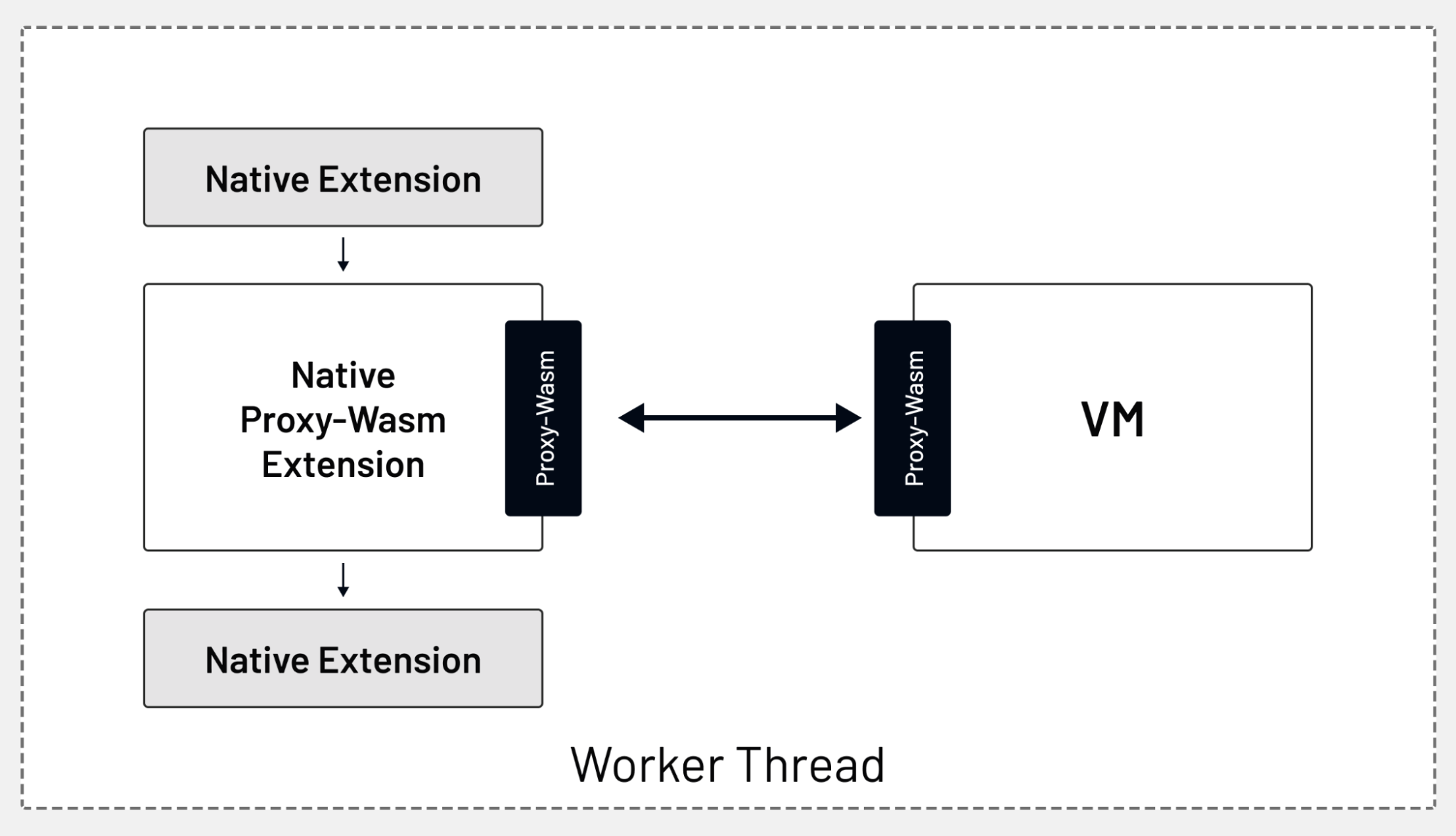

Proxy-Wasm

All of these APIs are defined by a component called Proxy-Wasm, a proxy-agnostic application binary interface (ABI) standard that specifies how proxies (host) and the Wasm modules interact. These interactions are implemented in the form of functions and callbacks.

The APIs in Proxy-Wasm are proxy-agnostic, which means they work with Envoy proxies as well as any other proxies (MOSN for example) that implement the Proxy-Wasm standard. This makes your Wasm filters portable between different proxies; they aren’t tied to Envoy only.

As requests come into Envoy, they are processed by the built-in filters. The data flows through the native or Lua filters and eventually goes through the Proxy-Wasm extension as shown in the figure below. Once the filter processes the data, the chain will continue or stop, depending on the result from your filter.
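The continue-or-stop behavior can be sketched as a simple loop over filters. The types and names here (`Action`, `Request`, `Filter`, `runChain`, `requireToken`, `addHeader`) are hypothetical and much simpler than Envoy’s real filter chain; the sketch assumes each filter returns a single value telling the host whether to keep going.

```go
package main

import "fmt"

// Action tells the host what to do after a filter has run.
type Action int

const (
	Continue Action = iota
	Stop
)

// Request is a minimal stand-in for the data flowing through the chain.
type Request struct {
	Headers map[string]string
}

// Filter is any step in the chain, whether native, Lua or Wasm.
type Filter func(req *Request) Action

// runChain calls filters in order until one returns Stop and reports
// how many filters were invoked.
func runChain(filters []Filter, req *Request) int {
	ran := 0
	for _, f := range filters {
		ran++
		if f(req) == Stop {
			break
		}
	}
	return ran
}

// requireToken stops the chain when no authorization header is present.
func requireToken(req *Request) Action {
	if req.Headers["authorization"] == "" {
		return Stop
	}
	return Continue
}

// addHeader adds a header and lets the chain continue.
func addHeader(req *Request) Action {
	req.Headers["x-example"] = "added"
	return Continue
}

func main() {
	chain := []Filter{requireToken, addHeader}
	req := &Request{Headers: map[string]string{}}
	ran := runChain(chain, req)
	fmt.Printf("filters run: %d, headers: %v\n", ran, req.Headers)
}
```

Because the request in `main` has no authorization header, the first filter stops the chain and the second filter never sees the request.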

Conclusion

This article introduced you to the world of Wasm modules. We started by explaining what Wasm is and what the original purpose for it was. Then we talked about different Envoy extensibility points: the native C++ filters, Lua-based filters and, of course, the Wasm-based filters.

In the next article, we’ll learn how to use GetEnvoy CLI to get started and quickly scaffold a Wasm module. We will dive a bit into the generated code to explain the lifecycle methods and the order in which they are executed once the Wasm filter starts and is processing the requests. Finally, we’ll show you how to use the EnvoyFilter resource to configure the Wasm module and deploy it to a Kubernetes cluster running Istio.


Source: InApps.net
